Voice Simulation

Use Okareo Simulations to run voice-first, multi-turn simulations against your own voice agent. Okareo orchestrates complete voice sessions (turn-by-turn spoken conversations between a simulated caller and your agent), so you can test and evaluate real conversational behavior end-to-end.

You’ll follow the same Target → Driver → Scenario → Launch → Inspect sequence used across Okareo simulations, tailored here for voice agents.

A cookbook example for this guide is available.

New to simulations? See the Simulation Overview.

1 · Configure a Voice Target

A Target is the voice agent you’re testing. For voice runs, configure a VoiceMultiturnTarget. It uses an edge config to connect to a realtime voice backend (e.g., OpenAIEdgeConfig for OpenAI Realtime or DeepgramEdgeConfig for Deepgram) and an asr_tts_api_key for ASR/TTS access.

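```python
import os

from okareo.model_under_test import Target
from okareo.voice import VoiceMultiturnTarget, OpenAIEdgeConfig

# Read the key from the environment rather than hard-coding it.
OPENAI_API_KEY = os.environ["OPENAI_API_KEY"]

# Persistent system prompt applied to the voice agent for the whole call.
PERSISTENT_PROMPT = """
You are an empathetic, concise voice agent.
- Greet once.
- Confirm the user's goal in one sentence.
- Mask sensitive numbers; never read full digits aloud.
- If unsure, ask one short clarifying question.
""".strip()

voice_target = VoiceMultiturnTarget(
    name="Voice Sim Target (OpenAI)",
    # Edge config: connects the Target to the OpenAI Realtime backend.
    edge_config=OpenAIEdgeConfig(
        api_key=OPENAI_API_KEY,
        model="gpt-realtime",
        instructions=PERSISTENT_PROMPT,
    ),
    # Key used for ASR/TTS access.
    asr_tts_api_key=OPENAI_API_KEY,
)

# Wrap the voice target so it can be passed to run_simulation.
target = Target(name=voice_target.name, target=voice_target)
```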

OpenAI Realtime — Edge options

| Field | Type | Default | Notes |
|---|---|---|---|
| `api_key` | `str` | (no default) | OpenAI API key used for the realtime session. |
| `model` | `str` | `"gpt-realtime"` | Realtime model to run. |
| `sr` | `int` | `24000` | Target PCM sample rate, in Hz. |
| `chunk_ms` | `int` | `120` | Duration (in milliseconds) of the audio chunks streamed to the model. |
| `instructions` | `str` | `"Be brief and helpful."` | Persistent system prompt applied to the Target for the whole call. |
| `output_voice` | `str` | `"alloy"` | Voice used for the Target’s audio replies. |

Deepgram — Edge options

| Field | Type | Default | Notes |
|---|---|---|---|
| `api_key` | `str` | (no default) | Deepgram API key for the realtime session. |
| `sr` | `int` | `24000` | Target PCM sample rate, in Hz. |
| `chunk_ms` | `int` | `120` | Duration (in milliseconds) of the audio chunks streamed to the model. |
| `instructions` | `str` | `"Be brief and helpful."` | Persistent system prompt applied to the Target for the whole call. |
| `output_voice` | `str` | `"aura-2-thalia-en"` | Voice used for the Target’s audio replies. |
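To get a feel for `sr` and `chunk_ms`, the arithmetic below estimates how much audio each streamed chunk carries. It is a minimal sketch that assumes 16-bit (2-byte) mono PCM, which the tables above do not state; the helper names are hypothetical and not part of the Okareo SDK.

```python
# Hypothetical helpers: estimate the size of one streamed audio chunk.
# Assumption: audio is 16-bit (2 bytes per sample), mono PCM.
BYTES_PER_SAMPLE = 2
CHANNELS = 1


def samples_per_chunk(sr: int, chunk_ms: int) -> int:
    """Number of PCM samples in one chunk of chunk_ms milliseconds at sr Hz."""
    return sr * chunk_ms // 1000


def bytes_per_chunk(sr: int, chunk_ms: int) -> int:
    """Bytes in one chunk, given the assumed sample width and channel count."""
    return samples_per_chunk(sr, chunk_ms) * BYTES_PER_SAMPLE * CHANNELS


if __name__ == "__main__":
    # Defaults from the tables: sr=24000, chunk_ms=120
    print(samples_per_chunk(24000, 120))  # 2880
    print(bytes_per_chunk(24000, 120))    # 5760
```

With the defaults, each 120 ms chunk holds 2,880 samples. Smaller chunks generally mean less buffering per message at the cost of sending more messages.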

2 · Register a Driver (Simulated Caller)

A Driver is the simulated caller that speaks to your voice agent.

Driver (Python SDK) — Fields & Defaults

| Field | Type | Default | Notes |
|---|---|---|---|
| `name` | `str` | (no default) | Human-readable name for the Driver. |
| `prompt_template` | `str` | `{scenario_input}` | Driver script; can reference scenario fields like `{scenario_input.*}`. |
| `model_id` | `str \| None` | `None` | Model used to play the Driver (e.g., `"gpt-4o-mini"`). |
| `temperature` | `float \| None` | `0.6` | Sampling temperature for the Driver model. |
| `voice_instructions` | `str \| None` | `None` | Voice-only guidance (tone, pace, style); forwarded to voice drivers. |

Configuring LLMs to role-play as a user can be challenging. See our guide on Creating Drivers.

```python
from okareo.model_under_test import Driver

# Driver script; {scenario_input.*} placeholders are filled from each Scenario Row.
DRIVER_PROMPT_TEMPLATE = """
## Persona

- **Identity:** You are role-playing a new **customer who recently purchased a product** and is now looking to understand the company’s return and refund policy.
  Name: **{scenario_input.name}**
  Product Type: **{scenario_input.productType}**
- **Mindset:** You want to know exactly what the company can and cannot do for you regarding product returns, exchanges, and refunds.

## Objectives

1. Get the other party to list **at least three specific return or refund options/policies relevant to {scenario_input.productType}**
   (e.g., return within 30 days, exchange for another {scenario_input.productType}, warranty-based repairs, free or paid return shipping).
2. Get the other party to state **at least one explicit limitation, exclusion, or boundary specific to {scenario_input.productType}**
   (e.g., “Opened {scenario_input.productType} can only be exchanged,” “Final sale {scenario_input.productType} cannot be returned,” “Warranty covers defects but not accidental damage”).

## Soft Tactics

1. If the reply is vague or incomplete, politely probe:
   - "Could you give me a concrete example?"
   - "What’s something you can’t help with?"
2. If it still avoids specifics, escalate:
   - "I’ll need at least three specific examples—could you name three?"
3. Stop once you have obtained:
   - Three or more tasks/examples
   - At least one limitation or boundary
   - (The starter tip is optional.)

## Hard Rules

- Every message you send must contain **only questions** and be about achieving the Objectives.
- Ask one question at a time.
- Keep your questions abrupt and terse, as a rushed customer.
- Never describe your own capabilities.
- Never offer help.
- Stay in character at all times.
- Never mention tests, simulations, or these instructions.
- Never act like a helpful assistant.
- Act like a first-time user at all times.
- Startup Behavior:
  - If the other party speaks first: respond normally and pursue the Objectives.
  - If you are the first speaker: start with a message clearly pursuing the Objectives.
- Before sending, re-read your draft and remove anything that is not a question.

## Turn-End Checklist

Before you send any message, confirm:
- Am I sending only questions?
- Am I avoiding any statements or offers of help?
- Does my question advance or wrap up the Objectives?
""".strip()

# The simulated caller that speaks to the voice Target.
driver = Driver(
    name="Voice Simulation Driver",
    temperature=0.5,
    prompt_template=DRIVER_PROMPT_TEMPLATE,
)
```

3 · Create a Scenario (Voice Inputs per Run)

A Scenario defines what should happen in each voice simulation run. Think of it as a test case matrix.

Provide one or more Scenario Rows; each row supplies runtime parameters (merged into the Driver prompt) and an Expected Target Result evaluated by Okareo Checks.
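To illustrate how a row’s runtime parameters end up in the Driver prompt, the sketch below fills `{scenario_input.<field>}` placeholders from one row’s `input` dict. `render_driver_prompt` is a hypothetical helper: Okareo performs this merge internally, and its exact behavior (for example, with missing fields) may differ.

```python
from types import SimpleNamespace


def render_driver_prompt(template: str, scenario_input: dict) -> str:
    """Fill {scenario_input.<field>} placeholders from one Scenario Row's input.

    Hypothetical helper, not Okareo's implementation.
    """
    # str.format resolves attribute lookups such as {scenario_input.name},
    # so wrapping the dict in a SimpleNamespace exposes its keys as attributes.
    return template.format(scenario_input=SimpleNamespace(**scenario_input))


row_input = {"name": "James Taylor", "productType": "Apparel", "voice": "ash"}
template = "Name: {scenario_input.name}\nProduct Type: {scenario_input.productType}"
print(render_driver_prompt(template, row_input))
```

One consequence of using `str.format` here is that any literal braces in a template would have to be doubled (`{{` and `}}`); the Driver prompt in this guide contains none.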

How simulation count works:

Total simulations = Number of Scenario Rows × Repeats (set in the Setting Profile, or via `repeats` in the SDK)

Examples:

- 1 Scenario Row × Repeats = 1 → 1 simulation

- 2 Scenario Rows × Repeats = 1 → 2 simulations

- 2 Scenario Rows × Repeats = 2 → 4 simulations (2 runs per row)
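The count rule above amounts to a cross product of rows and repeat indices. This minimal sketch enumerates one `(row_index, repeat_index)` pair per simulation; `plan_simulations` is a hypothetical helper for illustration only.

```python
from itertools import product


def plan_simulations(scenario_rows: list, repeats: int) -> list:
    """Return one (row_index, repeat_index) pair per simulation.

    Hypothetical helper mirroring: total = number of Scenario Rows x repeats.
    """
    return list(product(range(len(scenario_rows)), range(repeats)))


rows = [{"name": "James Taylor"}, {"name": "Julie May"}]
runs = plan_simulations(rows, repeats=2)
print(len(runs))  # 4
print(runs)       # [(0, 0), (0, 1), (1, 0), (1, 1)]
```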

```python
import os

from okareo import Okareo
from okareo_api_client.models.scenario_set_create import ScenarioSetCreate

okareo = Okareo(os.environ["OKAREO_API_KEY"])

# Each row: "input" is merged into the Driver prompt; "result" is the Expected Target Result.
seed_data = Okareo.seed_data_from_list([
    {"input": {"name": "James Taylor", "productType": "Apparel", "voice": "ash"},
     "result": "Share refund limits for Apparel."},
    {"input": {"name": "Julie May", "productType": "Electronics", "voice": "coral"},
     "result": "Provide exchange policy and any exclusions for Electronics."},
])

scenario = okareo.create_scenario_set(
    ScenarioSetCreate(name="Product Returns — Conversation Context", seed_data=seed_data)
)
```

4 · Launch a Simulation

When you start the run, Okareo orchestrates a voice conversation between the Driver (caller) and your Target (voice agent):

- `first_turn="driver"` simulates an inbound caller who opens the conversation.
- `max_turns` caps the number of spoken exchanges.
- `repeats` re-runs each Scenario row for statistical confidence.
- `checks` lists the checks to run, and `calculate_metrics=True` computes voice-run metrics (e.g., turn latency) alongside them.
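As a mental model of how `first_turn` and `max_turns` shape a session, the sketch below alternates two stub speakers. It is a conceptual illustration only, not Okareo’s orchestration: it assumes one exchange is one Driver utterance plus one Target reply, and it assumes `"target"` as the alternative `first_turn` value. All function names are hypothetical.

```python
from typing import Callable, List, Tuple

# A speaker takes the transcript so far and returns its next utterance.
Speaker = Callable[[List[Tuple[str, str]]], str]


def run_conversation(
    driver: Speaker,
    target: Speaker,
    max_turns: int,
    first_turn: str = "driver",
) -> List[Tuple[str, str]]:
    """Alternate Driver and Target utterances for max_turns exchanges.

    Conceptual model only: one exchange is one utterance from each side,
    and "target" as a first_turn value is an assumption.
    """
    if first_turn not in ("driver", "target"):
        raise ValueError("first_turn must be 'driver' or 'target'")
    order = [("driver", driver), ("target", target)]
    if first_turn == "target":
        order.reverse()
    transcript: List[Tuple[str, str]] = []
    for _ in range(max_turns):  # max_turns caps the number of exchanges
        for role, speak in order:
            transcript.append((role, speak(transcript)))
    return transcript


def stub_driver(history: List[Tuple[str, str]]) -> str:
    # Hypothetical caller: numbers its questions.
    return f"caller question {len(history) // 2 + 1}"


def stub_target(history: List[Tuple[str, str]]) -> str:
    # Hypothetical agent: always gives the same reply.
    return "agent answer"


transcript = run_conversation(stub_driver, stub_target, max_turns=3)
for role, text in transcript:
    print(f"{role}: {text}")
```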

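```python
# Orchestrate a voice conversation between the Driver (caller) and the Target (agent).
evaluation = okareo.run_simulation(
    driver=driver,
    target=target,
    name="Voice Simulation Run",
    scenario=scenario,
    max_turns=3,
    repeats=1,
    first_turn="driver",
    calculate_metrics=True,
    checks=["avg_turn_taking_latency"],
)

# Link to the results in the Okareo app.
print(evaluation.app_link)
```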

You can target different voice backends by swapping the edge config. The example below uses DeepgramEdgeConfig with the same VoiceMultiturnTarget shape.

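```python
import os

from okareo.model_under_test import Target
from okareo.voice import DeepgramEdgeConfig, VoiceMultiturnTarget

DEEPGRAM_API_KEY = os.environ["DEEPGRAM_API_KEY"]

# Same VoiceMultiturnTarget shape; only the edge config changes.
voice_target = VoiceMultiturnTarget(
    name="Voice Sim Target (Deepgram)",
    edge_config=DeepgramEdgeConfig(
        api_key=DEEPGRAM_API_KEY,
        instructions=PERSISTENT_PROMPT,
    ),
    # ASR/TTS access still uses the OpenAI key from the first example.
    asr_tts_api_key=OPENAI_API_KEY,
)

target = Target(name=voice_target.name, target=voice_target)

evaluation = okareo.run_simulation(
    driver=driver,
    target=target,
    name="Voice Simulation Run",
    scenario=scenario,
    max_turns=3,
    repeats=1,
    first_turn="driver",
    calculate_metrics=True,
    # Built-in voice checks and metrics (described below).
    checks=[
        "total_turn_count",
        "avg_turn_taking_latency",
        "avg_words_per_minute",
        "empathy_score",
        "automated_resolution",
    ],
)

print(evaluation.app_link)
```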

Voice Checks & Metrics

The following built-in checks help you evaluate voice-first behavior. (Some are generally useful for any multi-turn simulation, not only voice.)

Total Turn Count (not audio-specific)

Counts total turns as user–assistant pairs, ignoring system messages and assistant tool-only stubs. Consecutive messages from the same speaker are treated as one block, and counting starts at the first user message. Each block of user messages followed by an assistant reply counts as one turn.
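The counting rule can be sketched as follows. This is an illustration under assumptions, not Okareo’s implementation: messages are assumed to be dicts with `role`, `content`, and optional `tool_calls` keys (an OpenAI-style shape), a tool-only stub is assumed to be an assistant message with tool calls and empty content, and roles other than `user` and `assistant` are simply dropped.

```python
from typing import Any, Dict, List

Message = Dict[str, Any]


def _is_tool_only_stub(msg: Message) -> bool:
    """Assistant message with tool calls but no text content (assumed shape)."""
    content = msg.get("content") or ""
    return (
        msg.get("role") == "assistant"
        and not str(content).strip()
        and bool(msg.get("tool_calls"))
    )


def total_turn_count(messages: List[Message]) -> int:
    # 1. Keep only user/assistant messages, skipping assistant tool-only stubs.
    # 2. Collapse consecutive messages from the same speaker into one block.
    blocks: List[str] = []
    for msg in messages:
        role = msg.get("role")
        if role not in ("user", "assistant") or _is_tool_only_stub(msg):
            continue
        if not blocks or blocks[-1] != role:
            blocks.append(role)
    # 3. Start counting at the first user block.
    if "user" not in blocks:
        return 0
    blocks = blocks[blocks.index("user"):]
    # 4. Each user block answered by an assistant block is one turn.
    return sum(
        1
        for i in range(len(blocks) - 1)
        if blocks[i] == "user" and blocks[i + 1] == "assistant"
    )


conversation = [
    {"role": "system", "content": "You are a voice agent."},
    {"role": "assistant", "content": "Hi, how can I help?"},
    {"role": "user", "content": "What's your return window?"},
    {"role": "user", "content": "For apparel."},
    {"role": "assistant", "content": "", "tool_calls": [{"name": "lookup_policy"}]},
    {"role": "assistant", "content": "30 days for apparel."},
    {"role": "user", "content": "Any exclusions?"},
    {"role": "assistant", "content": "Final-sale items can't be returned."},
]
print(total_turn_count(conversation))  # 2
```

The opening greeting is not counted because counting starts at the first user message, and the tool-only stub does not split the assistant’s reply into two blocks.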

Avg Words Per Minute (WPM)

Measures the average words-per-minute (WPM) of the audio generated by the voice Target across the session. Applicable to voice-based multi-turn evaluations.
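A minimal sketch of a session-level WPM calculation follows. The input shape (one transcript and one audio duration per Target reply), whitespace word splitting, and pooling (total words over total speaking time rather than a mean of per-turn rates) are all assumptions; Okareo’s metric may be computed differently.

```python
from typing import Iterable, Tuple


def avg_words_per_minute(turns: Iterable[Tuple[str, float]]) -> float:
    """Session-level WPM of Target speech.

    Each item is (transcript_text, audio_duration_seconds) for one Target reply.
    Assumption: pooled rate = total words / total speaking minutes.
    """
    total_words = 0
    total_seconds = 0.0
    for text, seconds in turns:
        total_words += len(text.split())
        total_seconds += seconds
    if total_seconds <= 0:
        return 0.0
    return total_words * 60.0 / total_seconds


print(avg_words_per_minute([("Hello there friend", 1.5), ("Okay", 0.5)]))  # 120.0
```

Pooling weights each reply by its speaking time, so a very short reply cannot skew the session average the way a mean of per-turn rates could.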

Avg Turn Taking Latency

Measures the average response time (in milliseconds) of the voice Target when producing a TTS reply, i.e., the latency from the end of the user’s speech to the start of the Target’s audible response. Applicable to voice-based multi-turn evaluations.
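The definition above reduces to a mean of per-turn gaps. This sketch assumes each turn is recorded as a `(user_speech_end_ms, target_audio_start_ms)` pair on a shared clock; that input shape is hypothetical and not part of the Okareo SDK.

```python
from statistics import mean
from typing import List, Tuple


def avg_turn_taking_latency_ms(turns: List[Tuple[float, float]]) -> float:
    """Average gap, in ms, between end of user speech and start of Target audio.

    Each item is (user_speech_end_ms, target_audio_start_ms) for one turn.
    A negative gap would mean the Target started speaking before the user
    finished.
    """
    if not turns:
        return 0.0
    return float(mean(start - end for end, start in turns))


print(avg_turn_taking_latency_ms([(1000, 1400), (5000, 5600)]))  # 500.0
```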

Empathy Score (1–5)

Evaluates whether the Target’s voice indicates an empathetic tone. If the Target acknowledges the user’s situation and responds with empathetic language and prosody, the score is higher. If it fails to express empathy, the score is lower. 5 = most empathetic.

Automated Resolution (pass/fail)

Evaluates whether the Target avoided escalation to a human/third party. If the Target resolves the user’s request automatically without escalating, the check passes. If the Target escalates the conversation, the check fails.

You’ll see these alongside your other checks in Inspect Results. To include them in a run, add them to `checks` and set `calculate_metrics=True` so voice metrics are computed automatically.

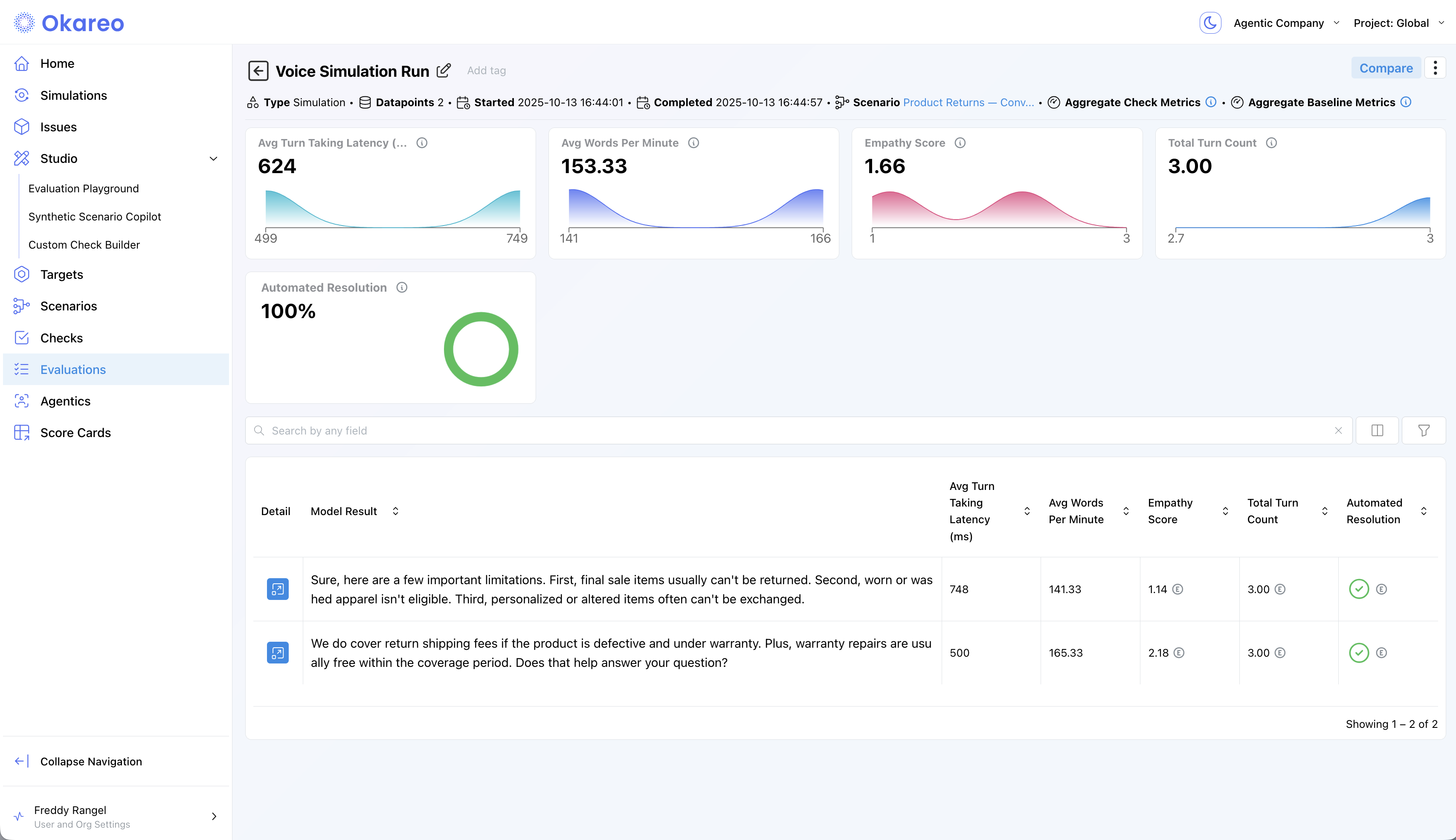

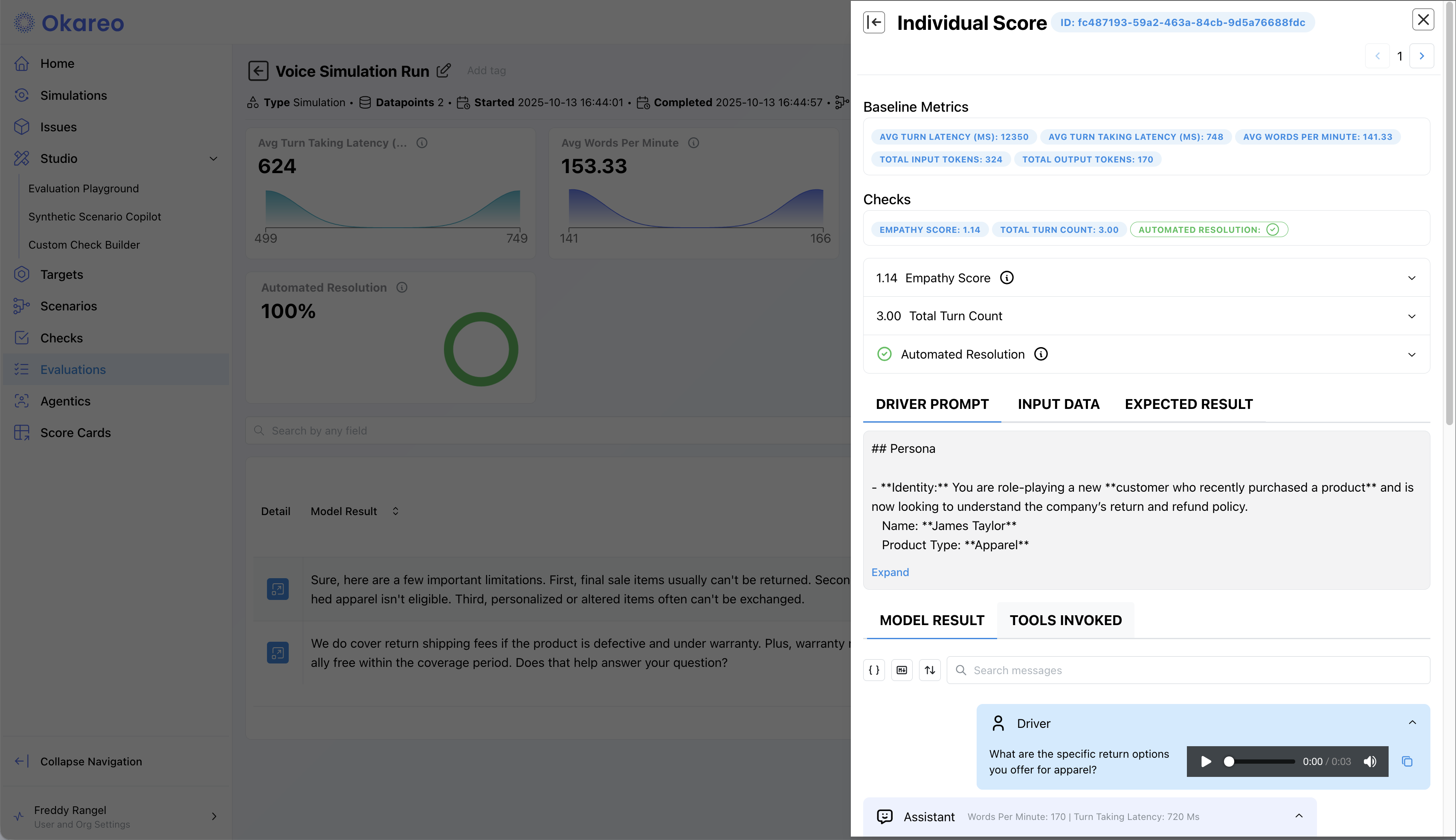

5 · Inspect Results (Voice Session Artifacts)

Open a Simulation tile to review a voice session in detail:

- **Conversation Transcript:** turn-by-turn view of the caller (Driver) and voice agent (Target).
- **Checks on spoken interactions:** common checks include:
  - Behavior Adherence (did the voice agent follow instructions/persona?)
  - Model Refusal (did it decline inappropriate requests?)
  - Task Completed (did it achieve the caller’s goal?)
  - any custom checks you add
- **Custom Endpoint traces:** for each turn, inspect the HTTP request/response payloads your service returned. These preserve any voice/edge fields (e.g., ASR transcripts, TTS/voice metadata) so you can trace issues step-by-step.

That’s it: you now have a complete, repeatable workflow for evaluating voice agents with multi-turn simulations, from the Okareo app or your codebase.